Since ChatGPT came out, I’ve noticed people around me anthropomorphizing it: “…she said…”, “…he told me…”. I wondered whether men and women give it different genders, and for different reasons.

So I made a quick survey and asked people to fill it out. I was expecting maybe 50 responses, but after getting 588, I decided I ought to write up a report on my findings.

I built a data analysis pipeline in a Jupyter notebook, made a bunch of graphs, and learned about statistical significance tests along the way.
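To give a feel for what a significance test looks like in practice, here is a minimal sketch of a Pearson chi-square test of independence on a 2x2 table, using only the Python standard library. This is an illustration, not necessarily the test used in the report: the function name `chi_square_2x2` is hypothetical, and the counts are made-up placeholders, not the survey data.

```python
import math


def chi_square_2x2(table):
    """Pearson chi-square test of independence for a 2x2 table of counts.

    Returns (statistic, p_value). No continuity correction is applied.
    """
    (a, b), (c, d) = table
    n = a + b + c + d
    row_totals = (a + b, c + d)
    col_totals = (a + c, b + d)

    statistic = 0.0
    for i, row in enumerate(table):
        for j, observed in enumerate(row):
            # Expected count if row and column variables were independent.
            expected = row_totals[i] * col_totals[j] / n
            statistic += (observed - expected) ** 2 / expected

    # A 2x2 table has 1 degree of freedom, and for 1 degree of freedom
    # the chi-square survival function reduces to erfc(sqrt(x / 2)).
    p_value = math.erfc(math.sqrt(statistic / 2))
    return statistic, p_value


# Placeholder counts (NOT the survey data):
# rows = respondent's gender, columns = gender attributed to ChatGPT.
example_table = [
    [70, 30],
    [65, 35],
]

stat, p = chi_square_2x2(example_table)
print(f"chi2 = {stat:.3f}, p = {p:.3f}")
```

A large p-value (commonly, above 0.05) means the data give no evidence that the two variables are related. For larger tables or small counts, a library routine such as SciPy's `chi2_contingency` is the safer choice.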

You can find the report here.

Abstract

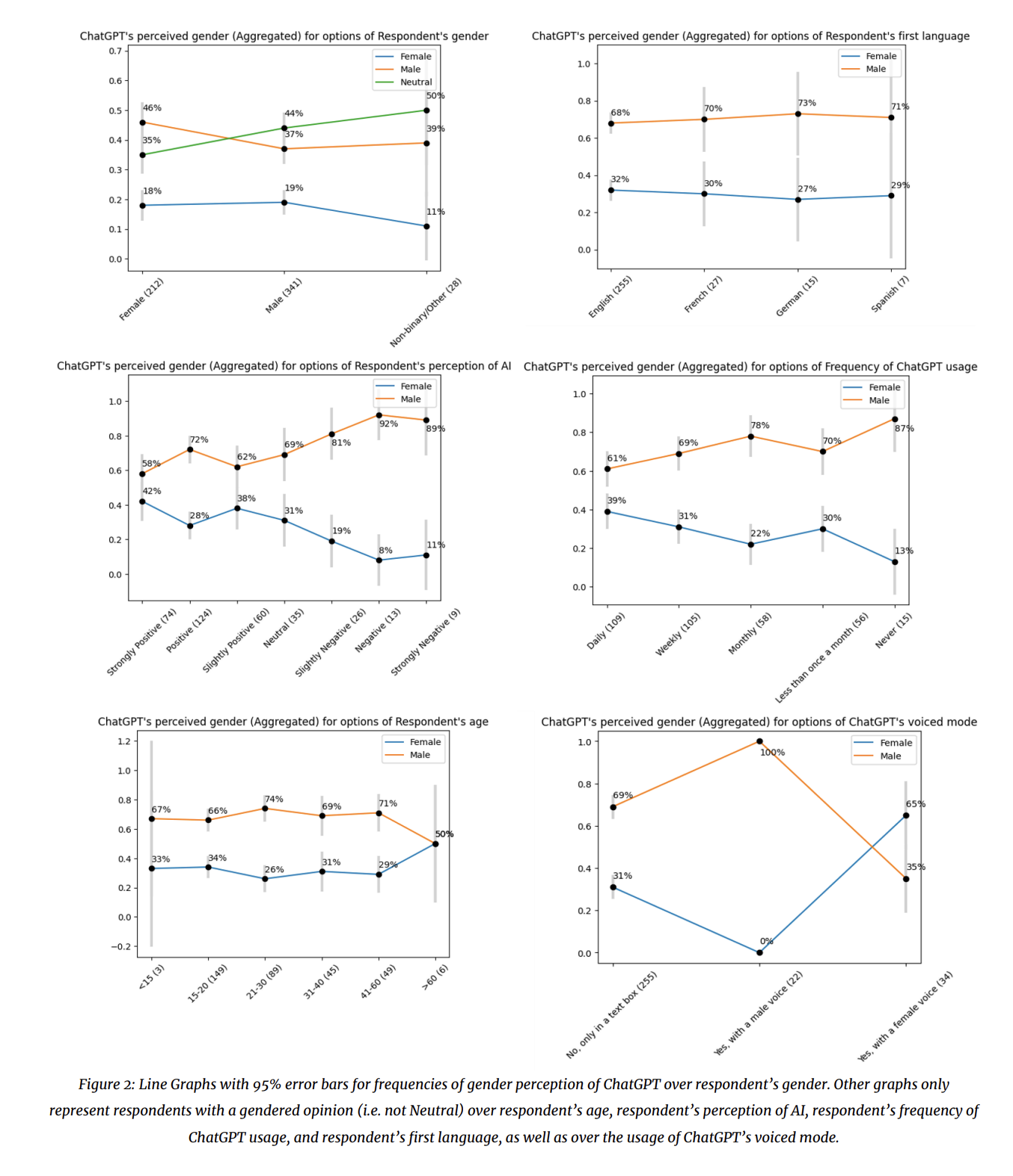

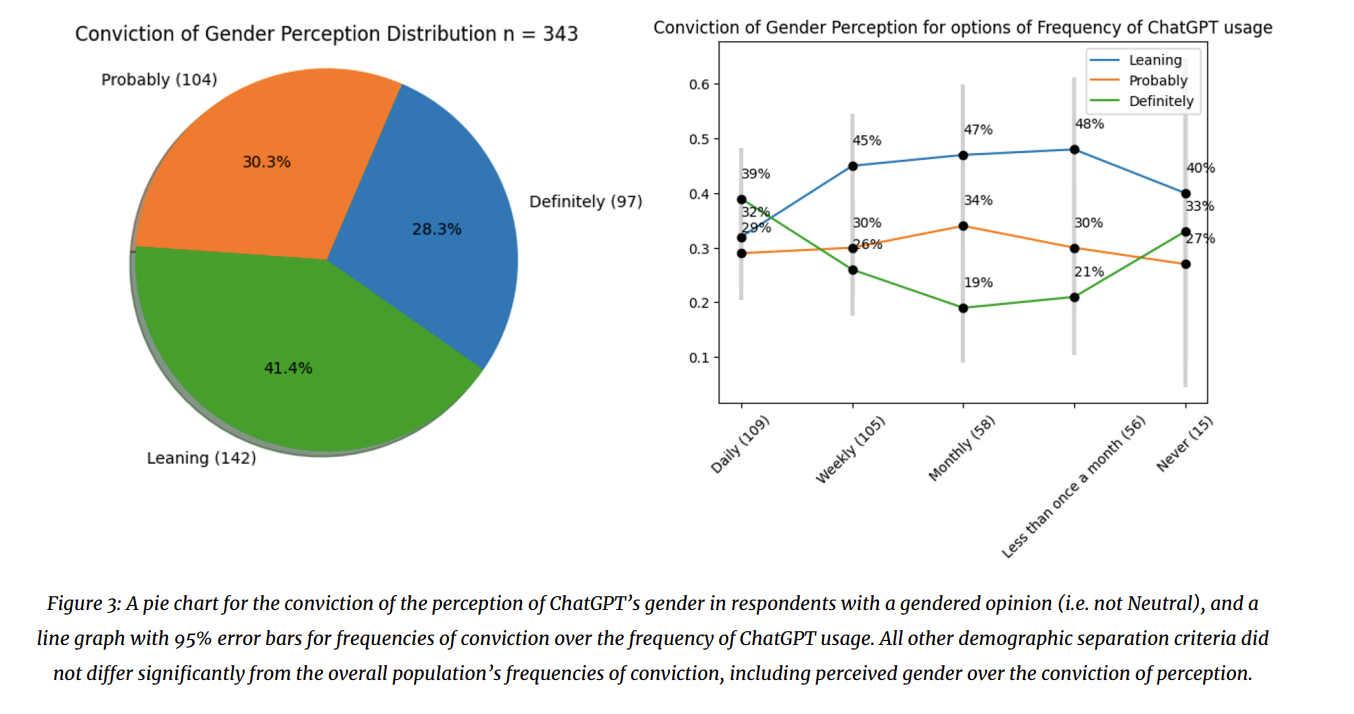

OpenAI’s ChatGPT is often anthropomorphized by its users, who may attribute gendered pronouns to it or subconsciously associate it with a gender. In our study, we explore the relationship between demographic groups and their perceptions of ChatGPT’s gender through a 7-question survey of 588 participants. Our findings reveal a 69% male bias among respondents who expressed a gendered perspective. Attitudes towards AI and frequency of usage significantly influence gender association. By contrast, a respondent’s own gender plays only a minimal role, and neither age nor gender significantly impacts gender perception. We conclude by discussing the implications of these findings for the application of AI in various industries.

Here are the figures demonstrating the results

You can find the report here, and the dataset as well as the data analysis code on GitHub.
